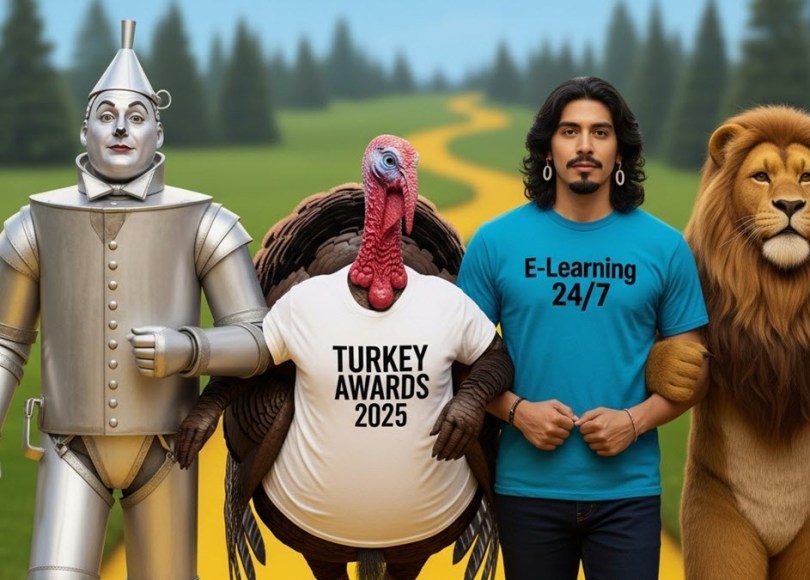

It’s that time of the year for one of the most popular awards in the entire world.

Call it exclusive, because, well, uh, it is.

Not every learning technology, including learning systems, e-learning tools, and the big Turkey for 2025, can win.

I know it’s definitely a bummer.

This year, the categories really should make you smile or, if you are one of those vendors, make you take out the dart board and throw darts.

One day, I hope a vendor will post their award on their site or in their marketing.

Multi-winner SuccessFactors didn’t qualify this year.

I know, many of you are bummed, while others are saying, “Who?”

The categories are

- Why is that feature/function in your system?

- The AI term nobody understands unless that person is knowledgeable about the technical aspects of AI

- AI spin

- DevLearn Turkey (I know, but like I said, lots to digest. Get it?)

- Turkey of the Year 2025

Category One – Why is that feature/function in your system?

Every year, vendors love to toss terms and spin capabilities in their system that any system can do; however, for whatever reason, people think it is unique – the vendor pushes it as such – and the logic behind it is baffling and ambiguous enough to get people enthralled.

For me, it makes no sense.

Nominees are

Micro-learning

That is the term. It is not microlearning (it’s missing the hyphen).

Secondly, every system on the entire e-learning planet since 2000 (even one vendor that went modem back in 1993) could do micro-learning.

For whatever reason, there are vendors out there pushing their system as a microlearning platform – ignoring the actual spelling.

Micro means short – and actually, if you are creating content for micro, the total running time should be under three minutes (assuming the person goes straight through, rather than focusing on an area of interest, which is why it was established).

Then there is the vendor who pitches micro-learning but fails to mention that it is, say, by chapter, so you could have a “micro-course” that runs more than 50 minutes, again assuming that you are going straight through, and based upon some weird calculation of how fast someone can take a course or content.

Since they personally do not know your learning style, your learning retention rate, or that e-learning was created so you can go as fast or slow as you want and repeat to learn more, the idea of micro-learning is at best misleading and at worst disingenuous.

Flow of Work

I won’t mention the vendors, but a couple launched a platform around the flow of work.

Since FOW (I will use this to represent flow of work) is doable in the majority of systems targeting the corporate space, sans associations, non-profits, and any entity that views e-learning (online learning) differently (than traditional-typical), my perspective is that they are duds.

Flow of Work sounds nice – it gives off the impression that what it can do can’t be achieved with a variety of systems targeting employees (because that audience is FOW’s goal). It is nothing more than a nice term that L&D people (not everyone) request, or that a vendor lands on after looking at enough use cases and thinking, “winner.”

I should add that the flow of work is not the same thing as the flow of learning.

Ok, next!

Client(s) wanted it, so we have it

The absolute worst.

My philosophy around this attitude is quite simple: “You (the vendor) should be the experts, not your client(s).”

Ask a client what they want to see in a system, and you might be surprised by their answer.

Vendors who go this route often will note that they asked a set of clients what they wanted (it’s never everyone, especially those who never use the system – and thus the question should be why), and bam, here it is.

OR large enterprise clients ask for it and, zam, it goes in there.

If your roadmap is over 10% client requests, you should stop selling a platform and move into the shoe business at a local department store.

I have seen way too many systems that never hit their stride go down the rabbit hole, ignoring market trends, forecasts, and just reality – especially Impact of Learning, which is what you want.

This approach costs you (vendor) time and resources. And for what?

A broad audience using it, or just a select few who wanted it in the first place?

And the winner is: The client(s) wanted it, so we added it (aka the roadmap is over 50% client-driven)

Category Two – The AI term nobody understands unless that person is knowledgeable about the technical aspects of AI

Rather than going into too many details, let’s just cut to the chase and announce the winner. Depending on what system you are using or plan to use in late 2025, it’s definitely a big-time term for vendors to use: MCP.

MCP means Model Context Protocol.

It’s an open standard that connects AI applications to external systems.
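For the non-technical crowd, here is a rough idea of what that connection looks like under the hood. MCP messages use JSON-RPC 2.0, and an AI application asks a server to run a “tool” by sending a `tools/call` request. The sketch below only builds such a message and prints it; the helper `build_tool_call`, the tool name `search_courses`, and its arguments are made up for illustration and do not come from any vendor’s system.

```python
import json


def build_tool_call(request_id, tool_name, arguments):
    """Build a JSON-RPC 2.0 request asking an MCP server to run one tool."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


# Hypothetical tool and arguments, for illustration only.
request = build_tool_call(
    1, "search_courses", {"query": "forklift safety", "limit": 3}
)

# This is the text an AI application would send to the server.
print(json.dumps(request, indent=2))
```

The point is that the AI side and the learning system side agree on one message shape, so a vendor does not have to build a custom connector for every AI application.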

In our industry, especially in the learning tech system segment, vendors are tapping into this. Some already have, and you will see a lot more in 2026. This will be big.

It isn’t a turkey from the standpoint of what it can do – in fact, it is a big win; however, unless you understand all the technical nuances of AI within your learning system, it is a hot term that people say “ok” to without knowing what it is.

Congrats, MCP.

Category Three – AI Spin

Ever wondered what the Wild West in the States was like back in the 1880s?

What about the Middle Ages in Europe?

Lots of stuff and terms and ideas tossed around to get people to go “ooh, ahh,” and with a glaze in their eyes, buy it – ignoring the reality.

Nominees are

100% Accurate

This is just not true.

Vendors love to mention RAG, guardrails, your own content (compared to the web), trained data, and so on to give the impression that, with all those items and more, the output is 100% accurate.

A bonus is they will say that none of their clients have reported any issues or found fake or false information – ignoring the fact that the learners (employees, let’s say) using the system wouldn’t know, because they are either unaware of hallucinations or, if they are aware, they don’t recognize one when it happens and think that what they see must be real.

I don’t care if the vendor has a dozen LLMs, built by their own hands and tossed in with commercial and open-source LLMs, with RAGs, guardrails, and the list goes on.

The moment it goes sideways – i.e., something is inaccurate and impacts the company/department or the individual – the vendor will NOT take any responsibility.

The universal feedback I hear from vendors is “That’s not our fault.”

Awful – it is your fault if you say that your AI output is 100% accurate.

As a bonus, the vendors who push 100% accuracy claims will say that the person (the admin, btw) can edit it. The idea that the admin knows the differences and can spot them is ludicrous.

Let me tell you a little secret that search engine providers are seeing as well as researchers who study the AI responses you see on the web when you search.

a. People are just accepting what they see as accurate

b. The percentage of people clicking to go to the website directly is dropping – why go when you see the response you are asking for?

c. Fewer people are checking the sources

Now, if the masses are going this route, and those masses include the people using your system, what makes you, the vendor, think that everyone knows?

I was about to add other nominees, but I then realized that the turkey of AI spin for 2025 is

100% Accuracy – Not the Vendor’s fault if it is wrong (according to the vendor)

Category Four – DevLearn Turkey 2025 Award

It’s simple, really.

People go to the show for the seminars/learning with the expo-trade show as either a key component or a secondary focus to see what is out there.

For a vendor, the goal is to get a human or humans interested in seeing the product – ideally at the show – and to give them responses that align with their questions.

After all, think of it as a fact-finding mission.

That person may not be the decision maker.

The goal of a trade show is to be seen.

The cost for a vendor to attend these shows is high.

Way too many vendors attend a lot of shows, and can’t validate the close ratio or average close time per prospect.

When a vendor has dropped those funds, which can easily exceed 100K depending on the show, booth size, and location (critical), the ROI offset just isn’t there.
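If you want to run the numbers yourself, here is a back-of-the-napkin sketch. Only the 100K show cost comes from above; the lead count, close ratio, and average deal value are hypothetical placeholders, so plug in your own.

```python
def trade_show_roi(show_cost, leads, close_ratio, avg_deal_value):
    """Return cost per lead, expected deals, expected revenue, and ROI."""
    expected_deals = leads * close_ratio
    expected_revenue = expected_deals * avg_deal_value
    return {
        "cost_per_lead": show_cost / leads,
        "expected_deals": expected_deals,
        "expected_revenue": expected_revenue,
        # ROI as a fraction of the spend; negative means the show lost money.
        "roi": (expected_revenue - show_cost) / show_cost,
    }


# Hypothetical inputs, except the 100K spend mentioned in the text.
result = trade_show_roi(
    show_cost=100_000,
    leads=200,
    close_ratio=0.02,
    avg_deal_value=20_000,
)

for name, value in result.items():
    print(f"{name}: {value:,.2f}")
```

A vendor who can’t fill in the close ratio has no way to know whether that last number is positive or negative.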

This all slides – okay, a lot – under marketing.

Marketing is a term that anyone can understand.

You see it every day – on the web, using the Kindle (assuming you went with the ad version), on television, on radio, and on and on – even at your grocery store, bodega, or mercado.

Anyway, as I noted before, many vendors failed at this concept. Yes, they got leads – everyone does.

Nominees are

Sana Labs – The Workday fail is big. Not only will Sana be tapped into Workday Learning, but AI agents will also be widely used in the Workday platform (it turns out that some WD HRIS people are under the impression it will be, while others are not so sure).

Anyway, Workday could have been leveraged here, since the announcement was made before Workday Convergence in Sept, plus the close was ten days before DevLearn – more than enough time to have something done and ready to go for the show (something called probability plays here, so the excuse of not enough time is lame).

Acorn PLMS – Going Anti-Swag with a maze booth approach and comments from people might seem like the best way to market, but it is a colossal fail.

Focus on the product – your system – not what Martha thinks.

Martha isn’t me, and they may not have the same use case as me.

Sheesh – this isn’t difficult.

Oh, and a huge miss on not having an acorn plush toy or something playing off your name, ACORN.

BTW, when someone types in PLMS in any search engine, it doesn’t return “Performance Learning Management System” – it returns a lot of things, just not that.

Dump PLMS, which is hard to say multiple times, and go to Acorn Talent Development System or Platform (whichever vernacular you prefer).

OR go back to Acorn LMS – because many LMSs are adding more and more options around TDS, but not enough to be a TDS.

Litmos – The whole arcade thing still bothers me.

Why drop all that cash on those plush dolls with your marketing dude’s yellow face with glasses on it – aligned from day one with your system – then make it difficult for people to get one?

Might as well have an oxygen bar, which costs lots of money and was another marketing fail (wait, you did that).

getAbstract – Hardcover books in the booth. To get one of the books they had on the booth counter for pickup, you completed a survey that likely captured your data.

Funny, I thought getAbstract was an e-learning content provider with a twist.

GA has been around a long time, so this isn’t their first rodeo, as they say.

Who honestly thought this was a huge marketing win?

getAbstract.

You could have noted the different topic types, indicated whether you were in an aggregator, listed which vendors sell your content, and so on.

Let’s see an example – that could have made sense.

But noooo.

Hardcover books.

Unbelievable.

Turkey of the Year, 2025

I know, it is that time.

This award, the Turkey, represents not Thanksgiving, but rather just a turkey.

I have tried Vegan turkey-like things; being vegan sort of forces you to attempt to find something similar (if you were a meat-eater before).

Vegan turkey roast with stuffing is not something I recommend. Acquired taste, I guess.

Ditto on Tofurky.

Best to go with a vegetable dish with quinoa stuffing, right there waiting for you.

Or go for Lion’s Mane steaks or Oyster Mushroom steaks – strongly recommend.

Does this have anything to do with the 2025 Turkey of the Year Award?

Nope.

Just a sidebar to build up the anticipation for the Turkey of the Year.

Nominees are

- Sana Labs

- Acorn PLMS

- getAbstract

- AI accuracy 100% Misnomer (oh, did I mention if things go sideways – it’s not the vendor’s fault – according to the vendor)

- The AI term nobody (the buyer, that is) understands unless they know the tech behind it – MCP

And the winner is the misnomer that AI is accurate, whether the vendor implies 100% without saying it or just says it.

Congrats!

To our second-place winner, Martha wants a hardcover book from getAbstract.

Please send it to Martha Washington

Or her husband, George.

E-Learning 24/7